Research

I’m excited about physical AI. I work on diffusion and flow models for generation, representation learning, and control. Generative world models serve as a natural testbed for these ideas: they learn to capture the structure of physical reality through deep visual representations, and we can train policies directly in this imagined space. I’m also fascinated by scaling foundation models and reinforcement learning toward superhuman capabilities, and by how these advances connect to alignment and to the profound economic transformation that AI will bring.

Highlighted Work

See my Google Scholar for a full list of publications.

The Promise of RL for Autoregressive Image Editing

Saba Ahmadi*, Rabiul Awal*, Ankur Sikarwar*, Amirhossein Kazemnejad*, Ge Ya Luo, Juan Rodriguez, Sai Rajeswar, Siva Reddy, Chris Pal, Benno Krojer, Aishwarya Agrawal

NeurIPS'25

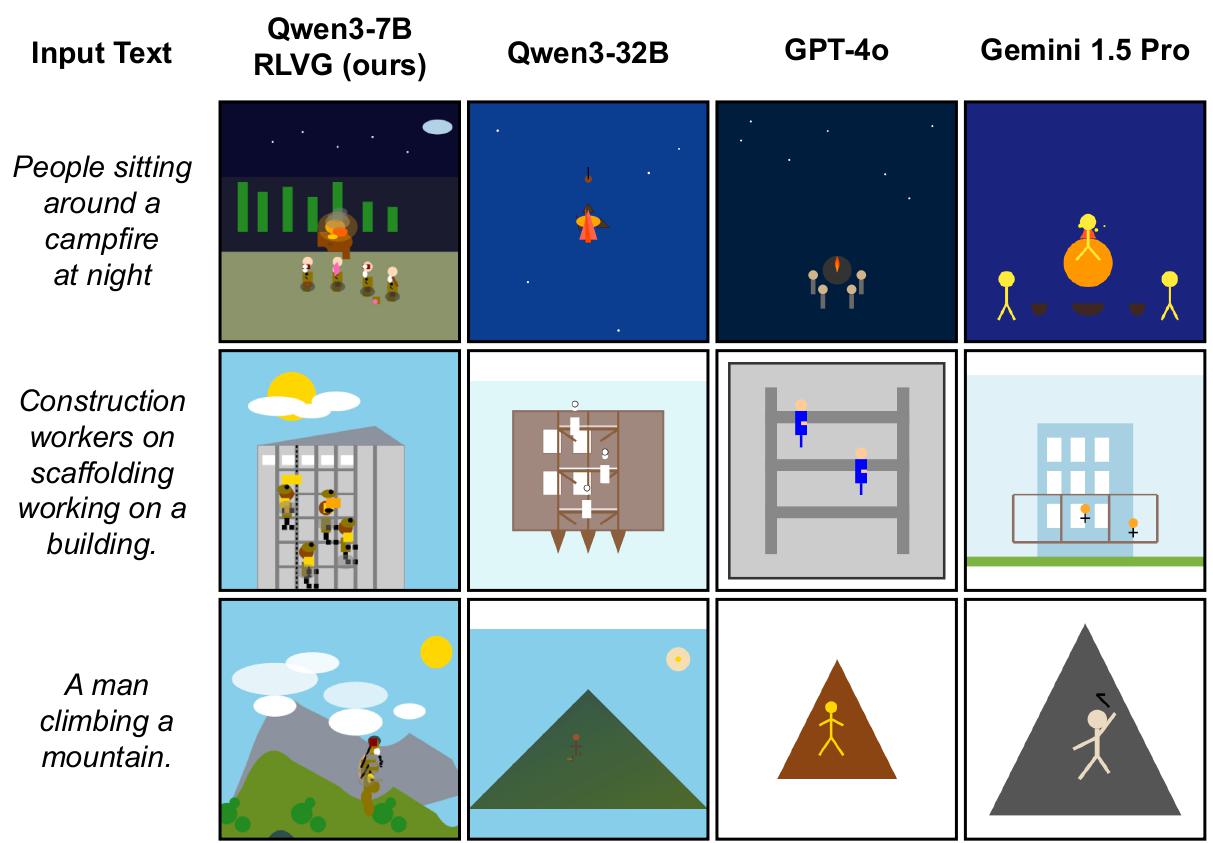

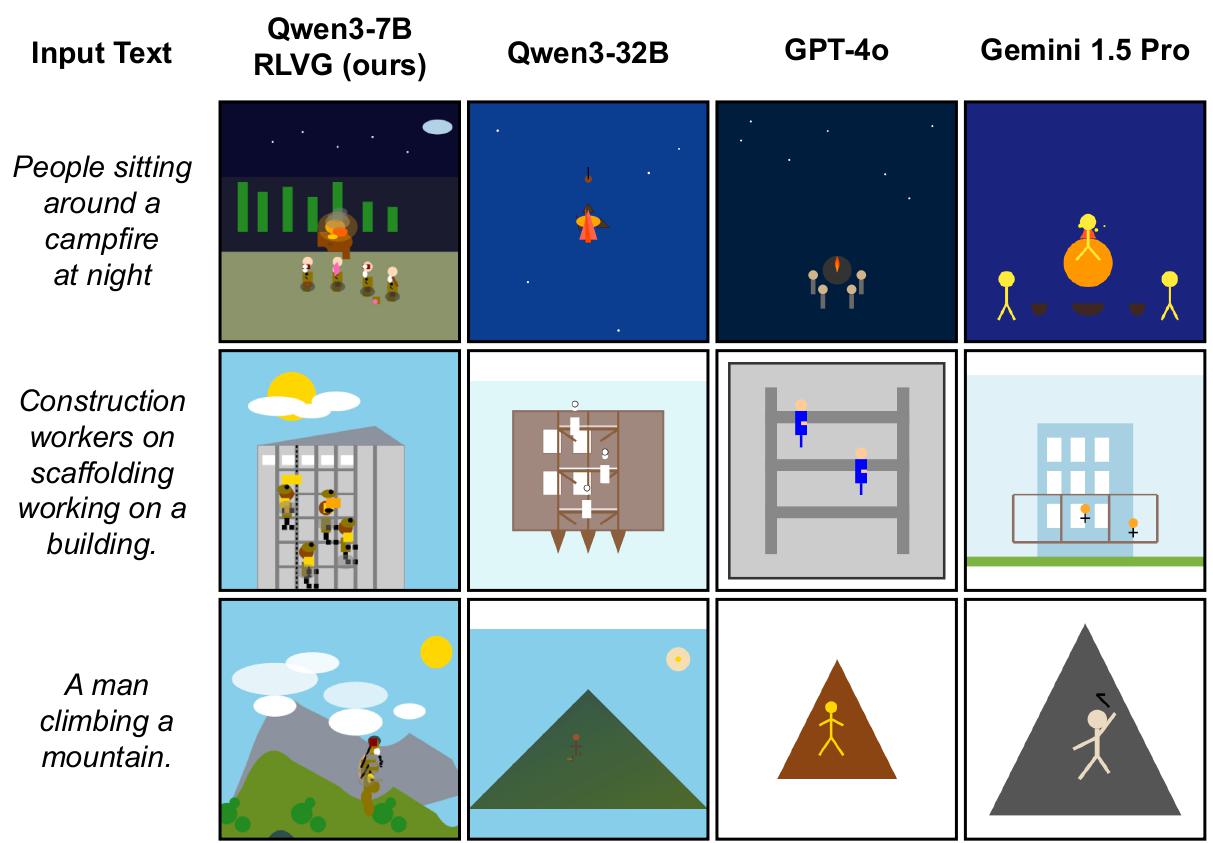

Rendering-Aware Reinforcement Learning for Vector Graphics Generation

Juan A. Rodriguez*, Haotian Zhang*, Abhay Puri, Aarash Feizi, Rishav Pramanik, Pascal Wichmann, Arnab Mondal, Mohammad Reza, Rabiul Awal + 6 others

NeurIPS'25

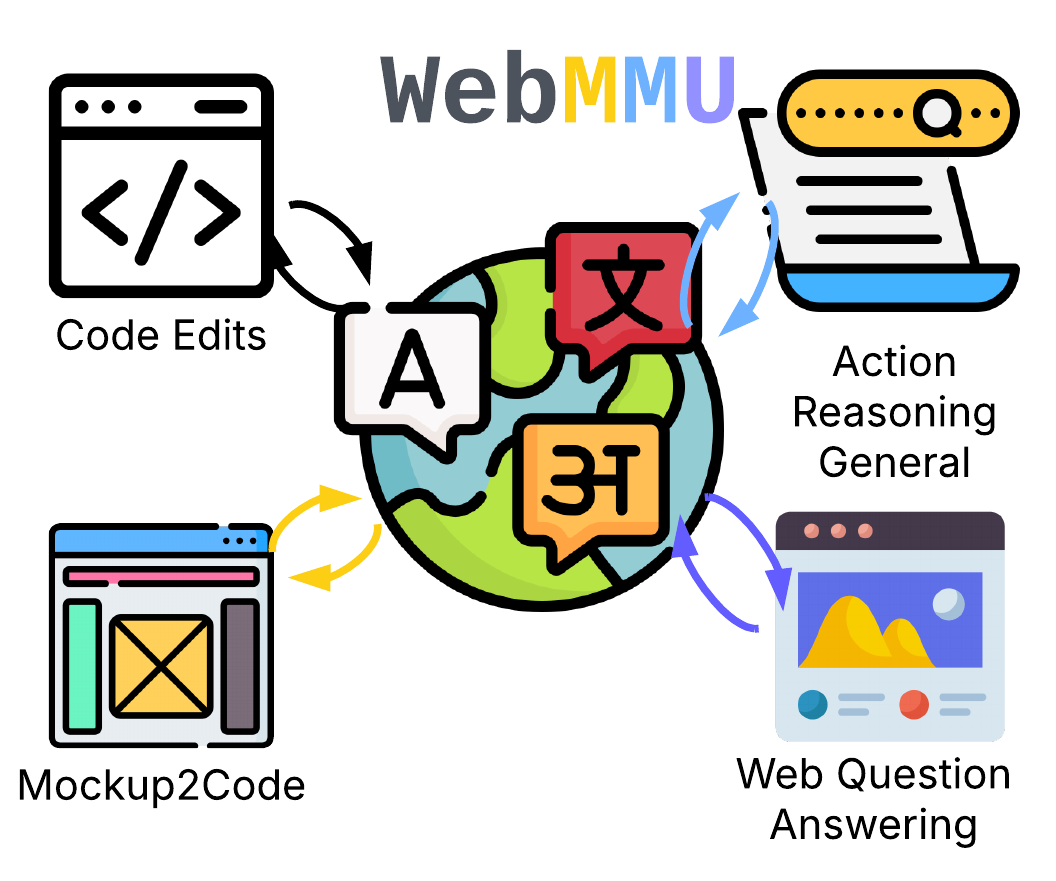

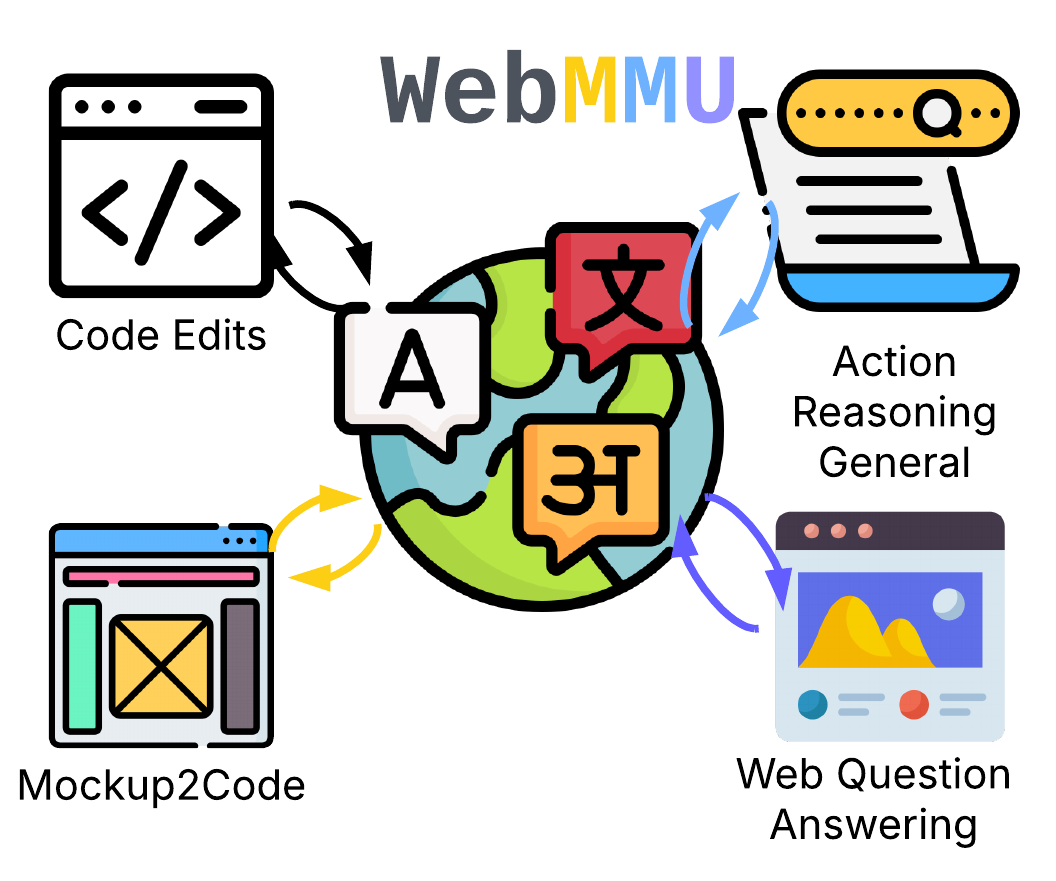

WebMMU: A Benchmark for Multimodal Multilingual Website Understanding and Code Generation

Rabiul Awal, Mahsa Massoud, Aarash Feizi, Zichao Li + 9 others

EMNLP'25 (Main) Oral

CTRL-O: Language-Controllable Object-Centric Visual Representation Learning

Aniket Rajiv Didolkar*, Andrii Zadaianchuk*, Rabiul Awal*, Maximilian Seitzer, Efstratios Gavves, Aishwarya Agrawal

CVPR'25 • Spotlight at MAR Workshop @ CVPR'25

VisMin: Visual Minimal-Change Understanding

Rabiul Awal*, Saba Ahmadi*, Le Zhang*, Aishwarya Agrawal

NeurIPS'24

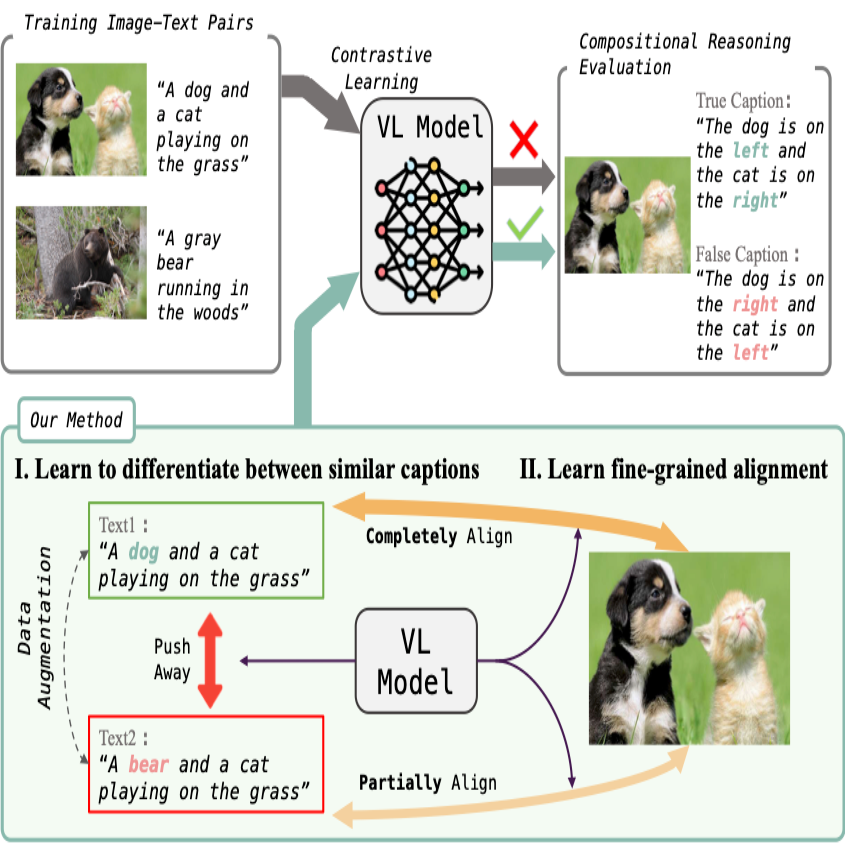

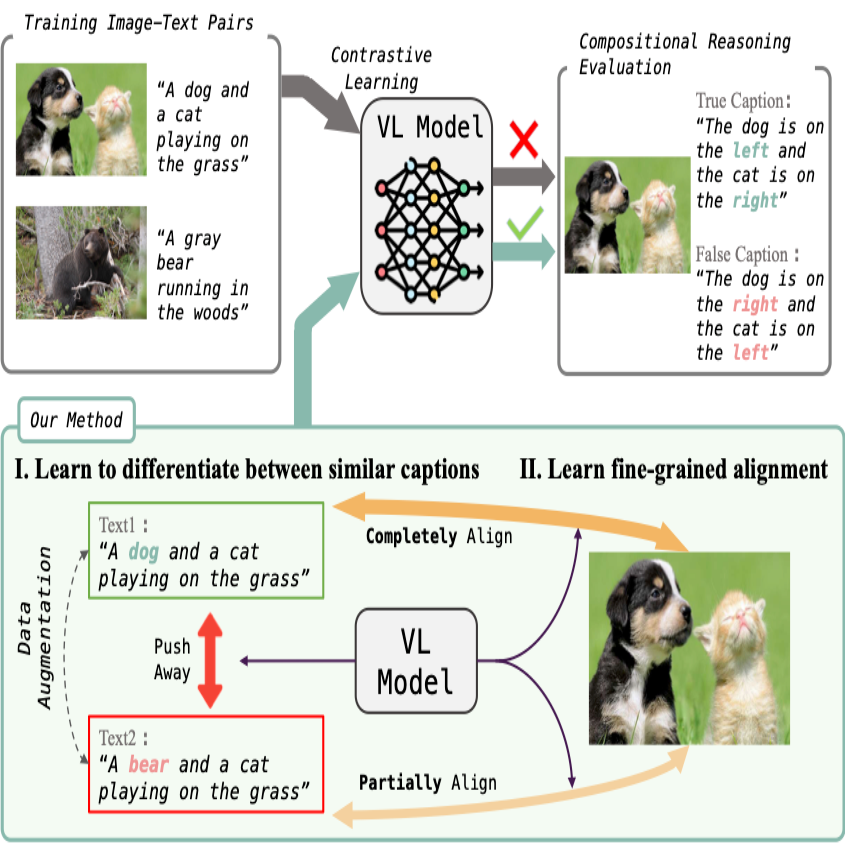

Contrasting intra-modal and ranking cross-modal hard negatives to enhance visio-linguistic fine-grained understanding

Le Zhang, Rabiul Awal, Aishwarya Agrawal

CVPR'24 • Spotlight at O-DRUM Workshop @ CVPR'23

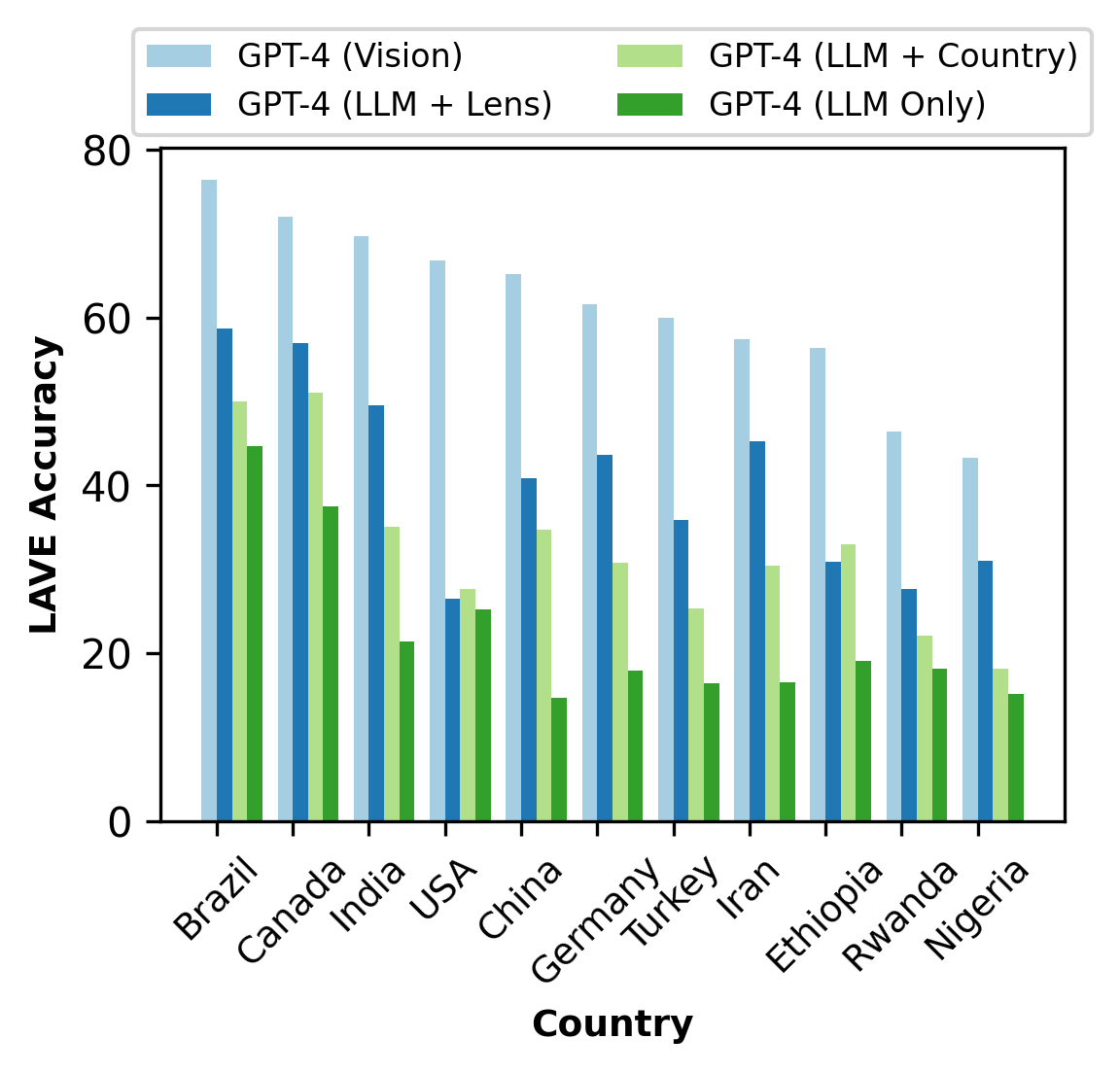

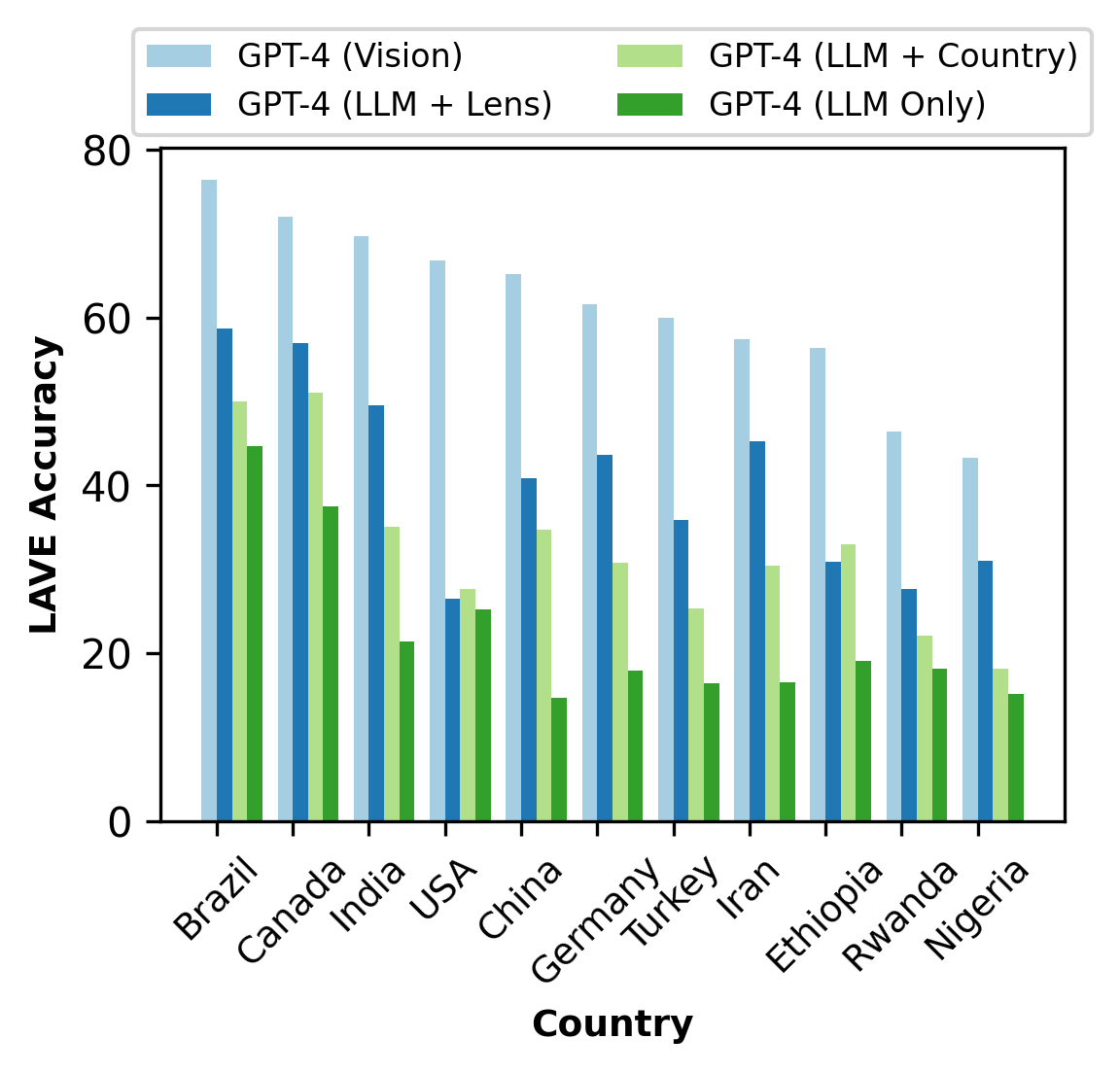

CulturalVQA: Benchmarking Vision Language Models for Cultural Knowledge

Shravan Nayak, Kanishk Jain, Rabiul Awal + 5 others

EMNLP'24 Oral

Announcement

NEW

World Modeling Workshop

February 4-6, 2026 • Mila, Montreal

I'm co-organizing this workshop, which brings together researchers working on world models, generative models, and their applications in AI systems.