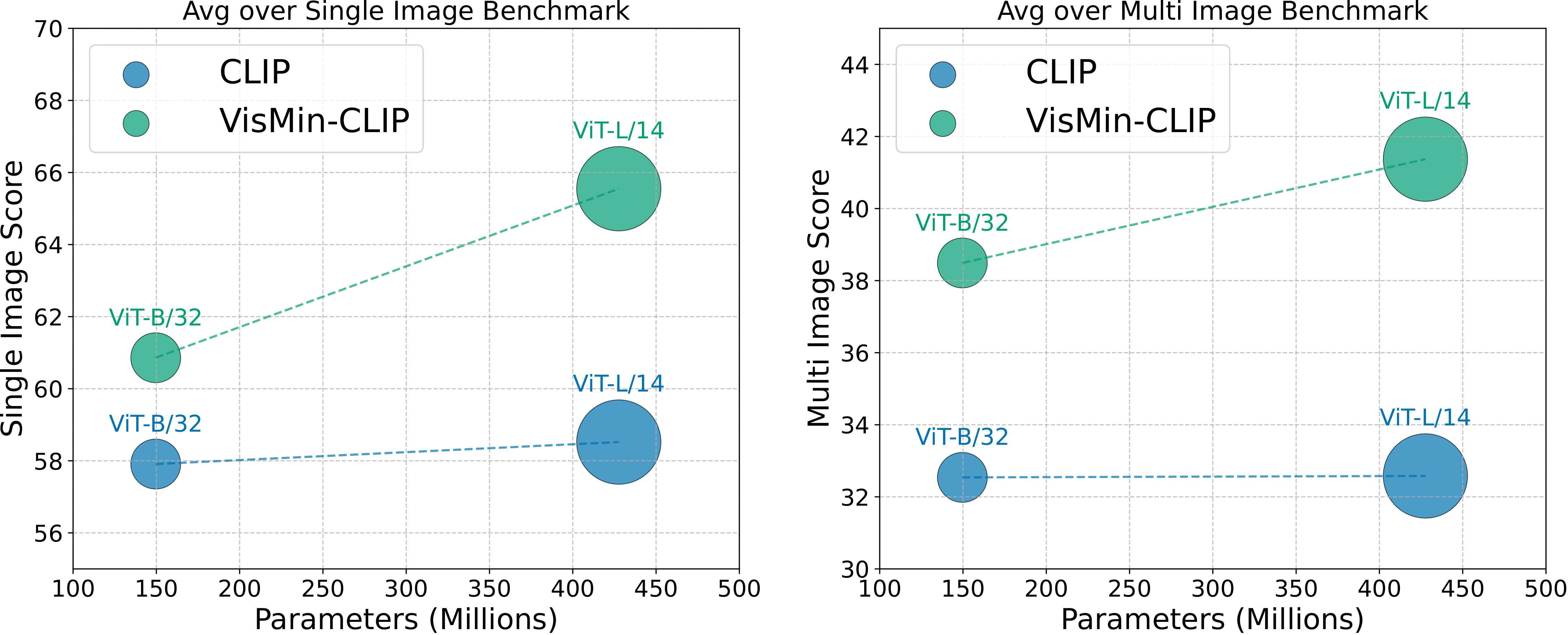

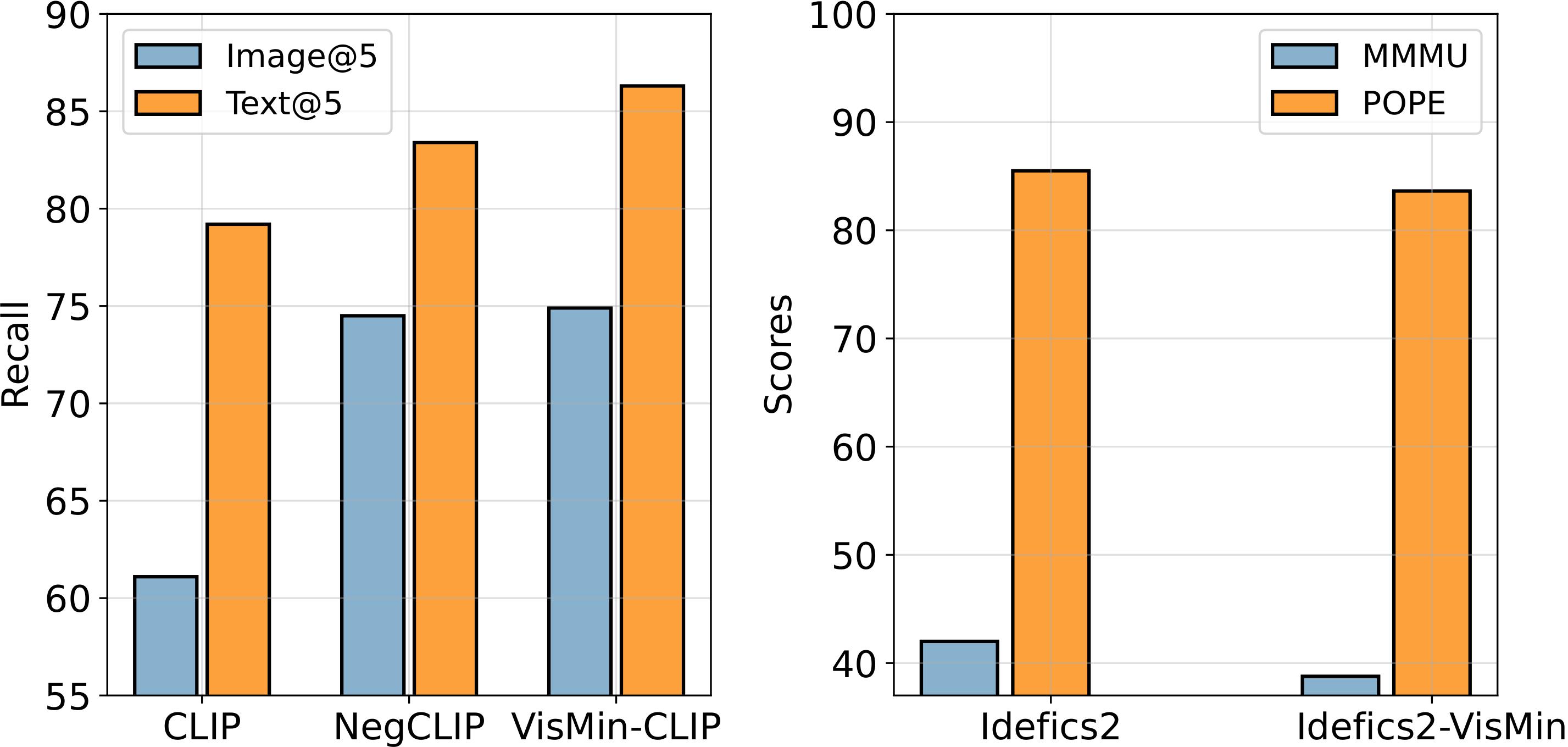

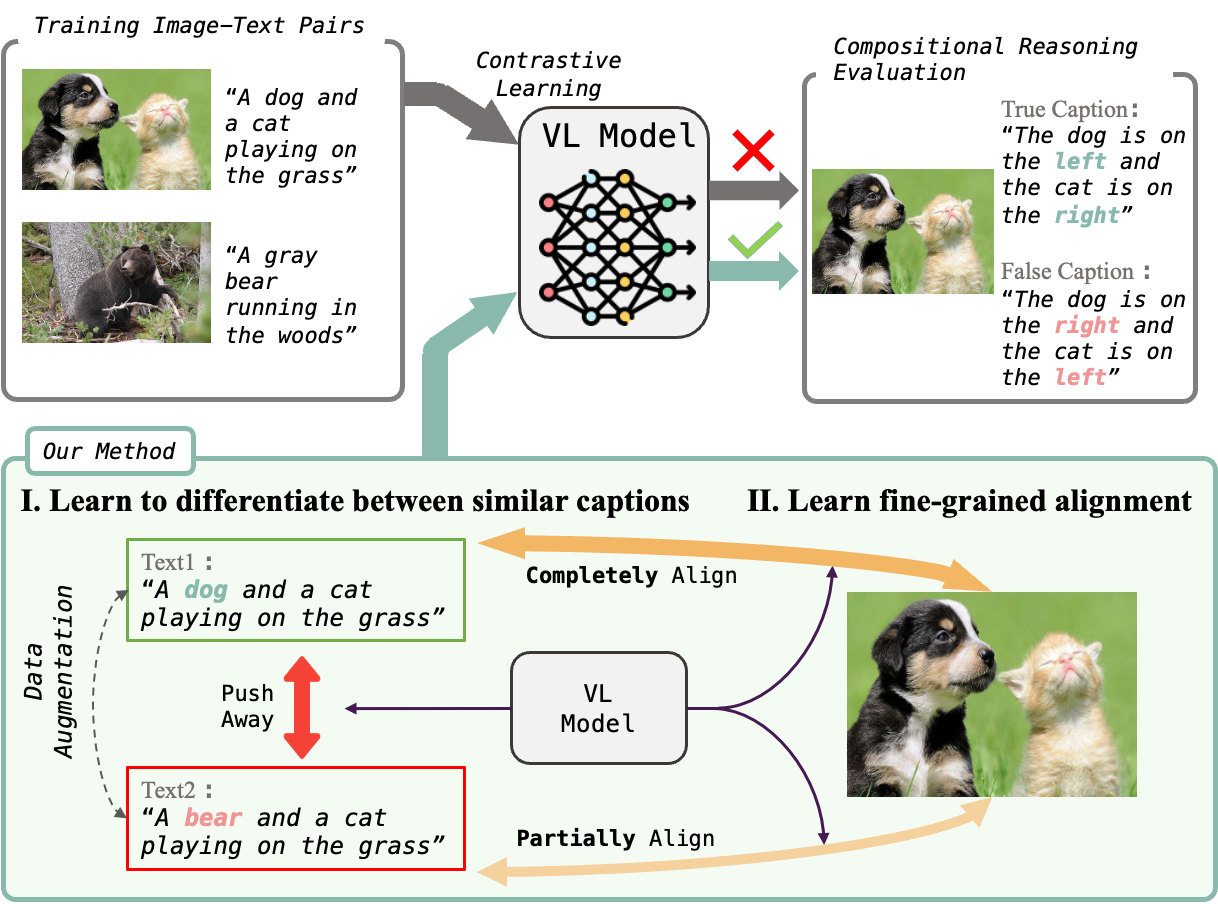

Setup. We evaluated a range of state-of-the-art Visual Language Models (VLMs) and Multimodal Large Language Models (MLLMs), including well-known foundational models such as CLIP and SigLIP and emerging MLLMs such as Idefics2 and GPT-4V. The evaluations covered image-text matching tasks, in which a model selects the correct caption for an image from two candidate captions (text task) or the correct image for a caption from two candidate images (image task). For MLLMs, we adapted these tasks to a visual question answering format that assesses how well each caption aligns with each image of a pair.
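The setup does not specify how the two matching tasks are scored. The sketch below assumes the Winoground-style convention for paired image-text benchmarks. Here `sim[i][j]` is a hypothetical model score for caption `i` against image `j` (for example, a CLIP cosine similarity), and caption `i` is the correct match for image `i`. The function names and sample scores are illustrative assumptions, not the evaluation code used here.

```python
from typing import Callable, List

# Hypothetical 2x2 score matrix: sim[i][j] = score of caption i with image j.
# Assumption: caption 0 matches image 0 and caption 1 matches image 1.
Matrix = List[List[float]]


def text_correct(sim: Matrix) -> bool:
    # Text task: for each image, the matching caption must outscore the other caption.
    return sim[0][0] > sim[1][0] and sim[1][1] > sim[0][1]


def image_correct(sim: Matrix) -> bool:
    # Image task: for each caption, the matching image must outscore the other image.
    return sim[0][0] > sim[0][1] and sim[1][1] > sim[1][0]


def group_correct(sim: Matrix) -> bool:
    # Group score: both the text and the image task must be solved for the pair.
    return text_correct(sim) and image_correct(sim)


def accuracy(samples: List[Matrix], is_correct: Callable[[Matrix], bool]) -> float:
    # Fraction of paired samples for which the given criterion holds.
    return sum(is_correct(s) for s in samples) / len(samples)


# Illustrative (made-up) scores for two image-caption pairs.
samples: List[Matrix] = [
    [[0.30, 0.32], [0.25, 0.35]],  # image 1 scores high with both captions
    [[0.33, 0.21], [0.22, 0.34]],  # cleanly separated pair
]

print(accuracy(samples, text_correct))   # 1.0
print(accuracy(samples, image_correct))  # 0.5
print(accuracy(samples, group_correct))  # 0.5
```

In the first sample, image 1 receives high scores from both captions. Ranking captions per image still succeeds, but ranking images for caption 0 fails. This illustrates one way text-task accuracy can exceed image-task accuracy under this scoring.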

Our analysis revealed that models generally score higher on text tasks than on image tasks, pointing to difficulties in aligning images with captions. In object and attribute recognition, models demonstrated strong capabilities, with foundational VLMs often outperforming MLLMs on image tasks. However, both types of models struggled significantly with spatial reasoning, highlighting a crucial area for future work. Notably, human participants outperformed models in complex scene comprehension, suggesting further room for model improvement.